Cinematic Intelligence for Your Images.

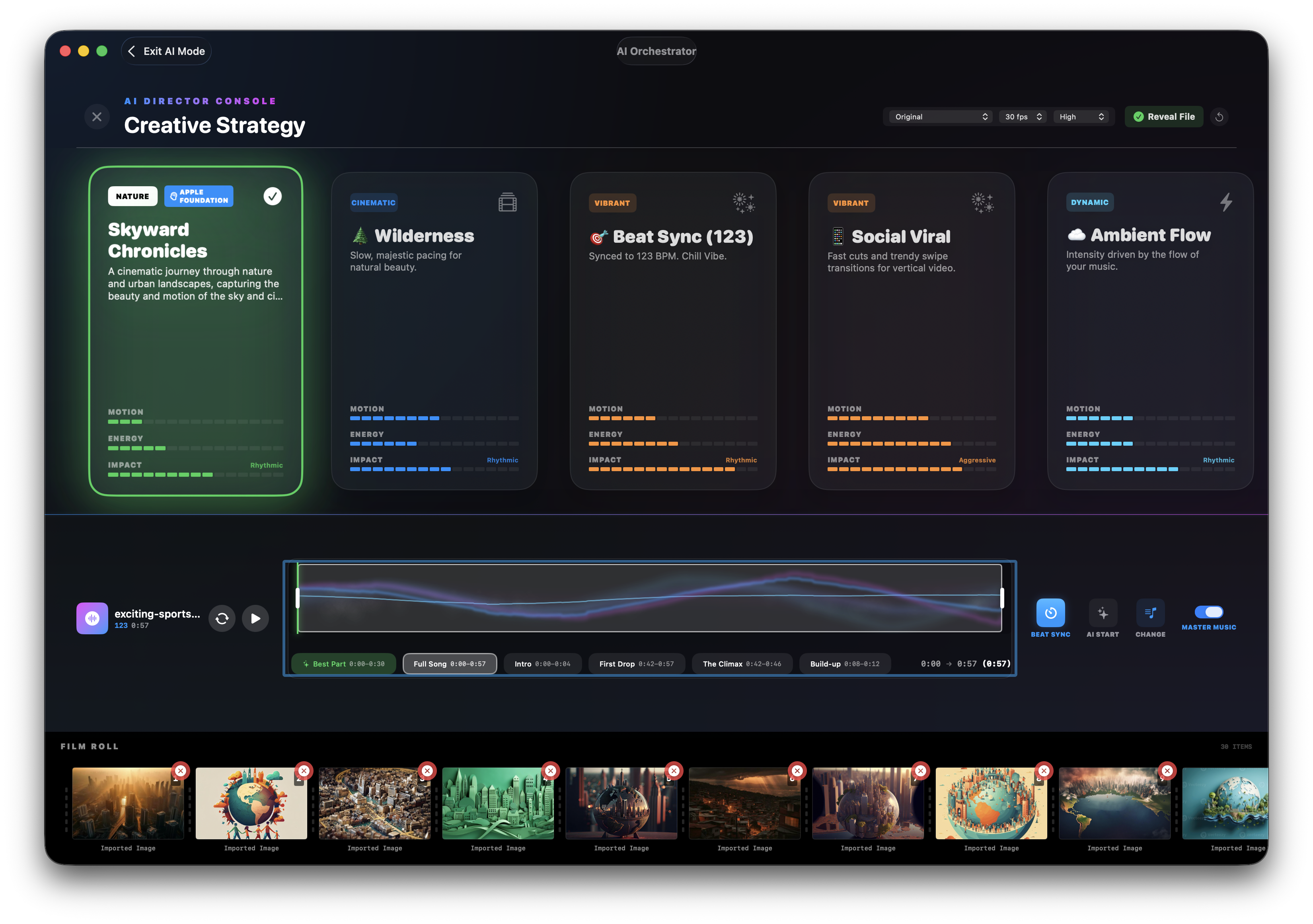

Drop photos. Pick a vibe. Your video cuts to the beat—automatically. No timeline, no editing, just AI-powered cinema on Apple Silicon.

Not Another Slideshow Maker

🎬 Music-Aware Intelligence

Most slideshow apps place images at fixed intervals. SteadyFlow listens to your music and places transitions on actual beats, downbeats, and song segments. Fifteen photos over a two-minute song? The algorithm automatically selects the 15 strongest musical moments.

🧠 Per-Image AI Analysis

Every single image is analyzed: saliency detection finds the subject, horizon detection ensures level framing, aesthetic scoring identifies your best shots, and subject isolation creates depth masks for parallax effects.

✨ One-Click Cinema

No timeline. No keyframes. No manual adjustments. Drop your photos, pick a style card, and export. The AI Director handles pacing, transitions, color grading, and motion—all tuned to the emotional arc of your chosen music.

🔒 Truly Private

Everything runs 100% on-device. Your photos are analyzed by Apple's Vision and Core ML frameworks and are never uploaded anywhere. No cloud. No subscriptions. No data leaves your Mac.

Powered by Apple Intelligence

On macOS Tahoe (macOS 26) and later, SteadyFlow taps Apple's Foundation Models framework, which runs entirely on your device. The on-device LLM analyzes your photo collection and directs the cinematic style, choosing pacing, transitions, and emotional tone based on what it sees in your images.